Psychoacoustics

Psychoacoustics is the scientific study of sound perception and audiology, that is, how humans perceive various sounds. More specifically, it is the branch of science studying the psychological and physiological responses associated with sound (including noise, speech and music). It can be further categorized as a branch of psychophysics. Psychoacoustics received its name from a field within psychology, i.e., recognition science, which deals with all kinds of human perceptions. It is an interdisciplinary field drawing on many areas, including psychology, acoustics, electronic engineering, physics, biology, physiology, and computer science.[1]

Background

Hearing is not a purely mechanical phenomenon of wave propagation; it is also a sensory and perceptual event. When a person hears something, it arrives at the ear as a mechanical sound wave traveling through the air, but within the ear it is transformed into neural action potentials. These nerve pulses then travel to the brain, where they are perceived. Along the way, the outer hair cells (OHC) of the mammalian cochlea enhance the sensitivity and sharpen the frequency resolution of the mechanical response of the cochlear partition, compared with a passive cochlea. Hence, in many problems in acoustics, such as audio processing, it is advantageous to take into account not just the mechanics of the environment but also the fact that both the ear and the brain are involved in a person's listening experience.

The inner ear, for example, does significant signal processing in converting sound waveforms into neural stimuli, so certain differences between waveforms may be imperceptible.[2] Data compression techniques, such as MP3, make use of this fact.[3] In addition, the ear has a nonlinear response to sounds of different intensity levels: the perceived magnitude of a sound, its loudness, does not grow in proportion to its physical intensity. Telephone networks and audio noise reduction systems make use of this fact by nonlinearly compressing data samples before transmission and then expanding them for playback.[4] Another effect of the ear's nonlinear response is that sounds close in frequency produce phantom beat notes, or intermodulation distortion products.[5]
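The compress-then-expand scheme used in telephony is known as companding. A minimal sketch, assuming the continuous μ-law curve with μ = 255 (the idealized form behind the North American and Japanese G.711 standard, which actually encodes a segmented piecewise-linear approximation) and a plain 8-bit uniform quantizer; the function names are illustrative.

```python
import math

MU = 255.0  # mu-law parameter used in North American and Japanese telephony


def mu_law_compress(x: float, mu: float = MU) -> float:
    """Map a sample in [-1, 1] onto a logarithmic curve, also in [-1, 1]."""
    return math.copysign(math.log1p(mu * abs(x)) / math.log1p(mu), x)


def mu_law_expand(y: float, mu: float = MU) -> float:
    """Invert mu_law_compress."""
    return math.copysign(((1.0 + mu) ** abs(y) - 1.0) / mu, y)


def quantize(v: float, bits: int = 8) -> float:
    """Round a value in [-1, 1] to one of 2**bits - 1 evenly spaced levels."""
    steps = 2 ** (bits - 1) - 1
    return round(v * steps) / steps


if __name__ == "__main__":
    for x in (0.001, 0.01, 0.1, 0.5):
        linear = quantize(x)                                  # no companding
        companded = mu_law_expand(quantize(mu_law_compress(x)))
        print(f"x={x:<6} linear={linear:.5f} companded={companded:.5f}")
```

With plain 8-bit quantization the quietest sample (0.001) rounds to zero, while the companded path reproduces it within a few percent: the logarithmic curve spends more of the code space on quiet sounds, roughly in line with the ear's nonlinear loudness response.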

The term "psychoacoustics" also arises in discussions of cognitive psychology, specifically the effects that personal expectations, prejudices, and predispositions may have on how listeners evaluate and compare sonic aesthetics and acuity, and on how they judge the relative qualities of various musical instruments and performers. The expression that one "hears what one wants (or expects) to hear" may apply in such discussions.[citation needed]

Limits of perception

An equal-loudness contour. Note peak sensitivity around 2–4 kHz, in the middle of the voice frequency band.

The human ear can nominally hear sounds in the range 20 Hz (0.02 kHz) to 20,000 Hz (20 kHz). The upper limit tends to decrease with age; most adults are unable to hear above 16 kHz. The lowest frequency that has been identified as a musical tone is 12 Hz under ideal laboratory conditions.[6] Tones between 4 and 16 Hz can be perceived via the body's sense of touch.

The frequency resolution of the ear is 3.6 Hz within the octave of 1000–2000 Hz; that is, changes in frequency larger than 3.6 Hz can be perceived in a clinical setting.[6] However, even smaller pitch differences can be perceived through other means. For example, the interference of two tones can often be heard as a repetitive variation in the volume of the sound. This amplitude modulation occurs at a frequency equal to the difference between the frequencies of the two tones and is known as beating.
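Beating follows from the sum-to-product identity sin a + sin b = 2 sin((a+b)/2) cos((a-b)/2): two equal-amplitude tones add up to a tone at their average frequency whose envelope, |2 cos(π(f1 - f2)t)|, swells and fades |f1 - f2| times per second. A minimal sketch, assuming unit-amplitude sine tones; the 1000 Hz and 1003 Hz values are illustrative.

```python
import math


def two_tones(f1: float, f2: float, t: float) -> float:
    """Instantaneous value of two unit-amplitude sine tones played together."""
    return math.sin(2 * math.pi * f1 * t) + math.sin(2 * math.pi * f2 * t)


def beat_envelope(f1: float, f2: float, t: float) -> float:
    """Slow envelope |2 cos(pi (f1 - f2) t)| given by the sum-to-product identity."""
    return abs(2 * math.cos(math.pi * (f1 - f2) * t))


def beat_frequency(f1: float, f2: float) -> float:
    """Number of loudness swells per second."""
    return abs(f1 - f2)


if __name__ == "__main__":
    f1, f2 = 1000.0, 1003.0
    print("beats per second:", beat_frequency(f1, f2))
    for t in (0.0, 1 / 6, 1 / 3):
        print(f"t={t:.3f}s envelope={beat_envelope(f1, f2, t):.3f}")
```

For tones 3 Hz apart the envelope is at its maximum at t = 0, vanishes at t = 1/6 s and peaks again at t = 1/3 s, i.e. three swells per second.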

The semitone scale used in Western musical notation is not a linear frequency scale but logarithmic. Other scales have been derived directly from experiments on human hearing perception, such as the mel scale and Bark scale (these are used in studying perception, but not usually in musical composition), and these are approximately logarithmic in frequency at the high-frequency end, but nearly linear at the low-frequency end.
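One widely used fit of the mel scale, commonly attributed to O'Shaughnessy (other variants exist), is m = 2595 log10(1 + f/700), while the equal-tempered semitone scale counts 12 log2(f/f_ref) steps. A minimal sketch, assuming those two formulas and an arbitrary 440 Hz reference, compares how far each scale advances for equal steps in hertz at the low end and for successive octaves at the high end.

```python
import math


def hz_to_mel(f_hz: float) -> float:
    """One common mel-scale fit: 2595 * log10(1 + f / 700)."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def hz_to_semitones(f_hz: float, ref_hz: float = 440.0) -> float:
    """Equal-tempered semitones above (or below) a reference pitch."""
    return 12.0 * math.log2(f_hz / ref_hz)


if __name__ == "__main__":
    # Low end: two equal 100 Hz steps, then high end: two successive octaves.
    for lo, hi in ((100, 200), (200, 300), (4000, 8000), (8000, 16000)):
        print(f"{lo}->{hi} Hz: {hz_to_mel(hi) - hz_to_mel(lo):6.1f} mel, "
              f"{hz_to_semitones(hi) - hz_to_semitones(lo):5.2f} semitones")
```

The two 100 Hz steps are close in mels (about 133 and 119) but very different in semitones (12 versus about 7), the near-linear low end; the two top octaves span a similar number of mels (about 694 and 735), the roughly logarithmic high end.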

The intensity range of audible sounds is enormous. Human eardrums are sensitive to variations in sound pressure and can detect pressure changes from as small as a few micropascals (µPa) to greater than 100 kPa. For this reason, sound pressure level is also measured logarithmically, with all pressures referenced to 20 µPa (or 1.97385×10⁻¹⁰ atm). The lower limit of audibility is therefore defined as 0 dB, but the upper limit is not as clearly defined; it is more a question of the level at which the ear will be physically harmed or at which noise-induced hearing loss becomes possible.
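Sound pressure level in decibels is L_p = 20 log10(p / p_0) with p_0 = 20 µPa. A short sketch (the function name is illustrative, and 101,325 Pa is taken as one standard atmosphere) shows why the logarithmic scale is convenient: the span from the reference pressure to 100 kPa, a factor of five billion, collapses into about 194 dB.

```python
import math

P_REF_PA = 20e-6        # reference pressure: 20 micropascals
ATM_PA = 101_325.0      # one standard atmosphere in pascals


def spl_db(pressure_pa: float) -> float:
    """Sound pressure level in dB re 20 uPa for an RMS pressure in pascals."""
    return 20.0 * math.log10(pressure_pa / P_REF_PA)


if __name__ == "__main__":
    print(f"reference in atm: {P_REF_PA / ATM_PA:.5e}")
    for p in (20e-6, 1.0, 100e3):
        print(f"{p:>10g} Pa -> {spl_db(p):6.1f} dB SPL")
```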

A more rigorous exploration of the lower limits of audibility shows that the minimum threshold at which a sound can be heard is frequency-dependent. By measuring this minimum intensity for test tones of various frequencies, a frequency-dependent absolute threshold of hearing (ATH) curve may be derived. Typically, the ear shows a peak of sensitivity (i.e., its lowest ATH) between 1 and 5 kHz, though the threshold changes with age, with older ears showing decreased sensitivity above 2 kHz.[7]
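A frequently cited closed-form approximation of the ATH for young, normal-hearing listeners is Terhardt's formula, ATH(f) ≈ 3.64 (f/1 kHz)^-0.8 - 6.5 exp(-0.6 (f/1 kHz - 3.3)²) + 10^-3 (f/1 kHz)^4 dB SPL, which is often used in perceptual audio coders. A minimal sketch, assuming that formula (it does not model the age-related loss mentioned above), locates the most sensitive frequency on a 10 Hz grid.

```python
import math


def ath_db_spl(f_hz: float) -> float:
    """Terhardt's approximation of the absolute threshold of hearing (dB SPL)."""
    k = f_hz / 1000.0  # frequency in kHz
    return (3.64 * k ** -0.8
            - 6.5 * math.exp(-0.6 * (k - 3.3) ** 2)
            + 1e-3 * k ** 4)


if __name__ == "__main__":
    grid = range(20, 20001, 10)
    best = min(grid, key=ath_db_spl)
    print(f"most sensitive frequency: {best} Hz ({ath_db_spl(best):.1f} dB SPL)")
    for f in (100, 1000, 10000):
        print(f"{f:>6} Hz: {ath_db_spl(f):6.1f} dB SPL")
```

Under this approximation the dip sits a little above 3 kHz, slightly below 0 dB SPL, consistent with the 1 to 5 kHz sensitivity peak described above.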

The ATH is the lowest of the equal-loudness contours. Equal-loudness contours indicate the sound pressure levels (dB SPL), across the range of audible frequencies, that are perceived as equally loud. Equal-loudness contours were first measured by Fletcher and Munson at Bell Labs in 1933 using pure tones reproduced via headphones, and the data they collected are called Fletcher–Munson curves. Because subjective loudness was difficult to measure, the Fletcher–Munson curves were averaged over many subjects.

Robinson and Dadson refined the process in 1956 to obtain a new set of equal-loudness curves for a frontal sound source measured in an anechoic chamber. The Robinson–Dadson curves were standardized as ISO 226 in 1986. In 2003, ISO 226 was revised with new equal-loudness contours based on data collected from 12 international studies.
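Equal-loudness data also underlie fixed frequency weightings such as A-weighting (listed among the psychoacoustic topics), which is usually described as approximating the inverse of the 40-phon contour. A minimal sketch of the A-weighting magnitude response, assuming the analog pole frequencies of about 20.6, 107.7, 737.9 and 12194 Hz and the +2.00 dB normalization given in IEC 61672; being a single fixed curve, it does not reproduce the level-dependent ISO 226 contours.

```python
import math


def a_weighting_db(f_hz: float) -> float:
    """A-weighting gain in dB at f_hz (about 0 dB at 1 kHz by construction)."""
    f2 = f_hz ** 2
    num = (12194.0 ** 2) * f2 ** 2
    den = ((f2 + 20.6 ** 2)
           * math.sqrt((f2 + 107.7 ** 2) * (f2 + 737.9 ** 2))
           * (f2 + 12194.0 ** 2))
    return 20.0 * math.log10(num / den) + 2.00


if __name__ == "__main__":
    for f in (50, 100, 1000, 4000, 10000):
        print(f"{f:>6} Hz: {a_weighting_db(f):+6.1f} dB")
```

The strong attenuation at low frequencies mirrors how the equal-loudness contours rise there: a quiet 100 Hz tone needs much more sound pressure than a 1 kHz tone to sound equally loud.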

Sound localization

Sound localization is the process of determining the location of a sound source. The brain uses subtle differences in loudness, tone and timing between the two ears to localize sound sources.[8] Localization can be described in terms of three-dimensional position: the azimuth or horizontal angle, the zenith or vertical angle, and the distance (for static sounds) or velocity (for moving sounds).[9] Like most four-legged animals, humans are adept at detecting direction in the horizontal plane but less so in the vertical, because the ears are placed symmetrically. Some species of owls have asymmetrically placed ears and can detect sound in all three planes, an adaptation for hunting small mammals in the dark.[10]
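The timing cue can be estimated with Woodworth's spherical-head approximation, ITD ≈ (r/c)(θ + sin θ), where θ is the source's angle away from the median plane. A minimal sketch, assuming a head radius of 8.75 cm and a speed of sound of 343 m/s (typical textbook values, not from the text). It also hints at why symmetric ears struggle with elevation: every direction on a "cone of confusion" around the interaural axis produces the same delay.

```python
import math

HEAD_RADIUS_M = 0.0875   # assumed average head radius
SPEED_OF_SOUND = 343.0   # m/s in air at about 20 degrees C


def lateral_angle(azimuth_deg: float, elevation_deg: float) -> float:
    """Angle (radians) between the source direction and the median plane.

    Azimuth is measured from straight ahead in the horizontal plane and
    elevation from the horizontal; the interaural axis points to the side.
    """
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return math.asin(math.cos(el) * math.sin(az))


def itd_seconds(azimuth_deg: float, elevation_deg: float = 0.0) -> float:
    """Woodworth interaural time difference for a rigid spherical head."""
    theta = lateral_angle(azimuth_deg, elevation_deg)
    return HEAD_RADIUS_M / SPEED_OF_SOUND * (theta + math.sin(theta))


if __name__ == "__main__":
    print(f"straight ahead: {itd_seconds(0) * 1e6:.0f} us")
    print(f"fully lateral:  {itd_seconds(90) * 1e6:.0f} us")
    # Two different directions that lie on the same cone of confusion:
    print(f"az 30, el 0:    {itd_seconds(30, 0) * 1e6:.0f} us")
    print(f"az 90, el 60:   {itd_seconds(90, 60) * 1e6:.0f} us")
```

The largest delay, for a source directly to one side, comes out at roughly 0.66 ms, and a source 30° to the side at ear level gives the same delay as one 60° up and fully to the side.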

Masking effects

Audio masking graph

Suppose a listener can hear a given acoustical signal under silent conditions. When the signal is played while another sound (a masker) is also playing, the signal has to be stronger for the listener to hear it. The masker does not need to contain the frequency components of the original signal for masking to happen, and a masked signal can still be heard even when it is weaker than the masker. Masking happens when a signal and a masker are played together (simultaneous masking). It also happens when the two do not overlap in time: forward masking occurs when the signal follows the masker, and backward masking occurs when the masker starts after the signal stops playing. Backward masking is weaker than forward masking. The masking effect has been widely used in psychoacoustical research. For example, by varying the level of the masker and measuring the threshold, researchers can plot a psychophysical tuning curve, which shows features similar to the frequency-tuning curves of auditory nerve fibers. Masking effects are also used in lossy audio encoders, which can eliminate some of the weaker sounds without the listener hearing a difference. MP3 encoders use this technique.
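Simultaneous masking is often modelled by spreading a masker's effect across the critical-band (Bark) scale: the masked threshold peaks near the masker and falls off with distance in Bark, more steeply toward lower frequencies than toward higher ones. A minimal sketch, assuming Zwicker and Terhardt's Bark approximation and a hypothetical triangular spreading function whose slopes (27 dB/Bark below, 10 dB/Bark above) and 10 dB offset are illustrative values, not those of any particular encoder. It ignores temporal masking and the absolute threshold.

```python
import math


def hz_to_bark(f_hz: float) -> float:
    """Zwicker and Terhardt approximation of the critical-band (Bark) scale."""
    return 13.0 * math.atan(0.00076 * f_hz) + 3.5 * math.atan((f_hz / 7500.0) ** 2)


# Illustrative spreading-function parameters (assumptions, not from the text).
LOWER_SLOPE_DB_PER_BARK = 27.0  # masking falls off steeply below the masker
UPPER_SLOPE_DB_PER_BARK = 10.0  # and more gradually above it
MASKING_OFFSET_DB = 10.0        # threshold peak sits this far below the masker


def masked_threshold(masker_hz: float, masker_db: float, probe_hz: float) -> float:
    """Level (dB) a probe must exceed to be heard alongside the masker."""
    dz = hz_to_bark(probe_hz) - hz_to_bark(masker_hz)
    slope = UPPER_SLOPE_DB_PER_BARK if dz > 0 else LOWER_SLOPE_DB_PER_BARK
    return masker_db - MASKING_OFFSET_DB - slope * abs(dz)


def is_masked(masker_hz: float, masker_db: float,
              probe_hz: float, probe_db: float) -> bool:
    return probe_db < masked_threshold(masker_hz, masker_db, probe_hz)


if __name__ == "__main__":
    for probe_hz, probe_db in ((900, 50), (1100, 50), (1100, 65)):
        state = "masked" if is_masked(1000, 70, probe_hz, probe_db) else "audible"
        print(f"{probe_db} dB probe at {probe_hz} Hz next to a 70 dB 1 kHz masker: {state}")
```

With these parameters a 50 dB probe 100 Hz above a 70 dB, 1 kHz masker is masked, while the same probe 100 Hz below it stays audible, and raising the upper probe to 65 dB makes it audible again.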

Missing fundamental

When presented with a harmonic series of frequencies in the relationship 2f, 3f, 4f, 5f, etc. (where f is a specific frequency), humans tend to perceive the pitch as f, even though no component at f itself is present.
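One way to see why f is heard is that a sum of harmonics 2f, 3f, 4f, 5f still repeats every 1/f seconds, even though no energy is present at f. A minimal sketch, assuming a plain time-domain autocorrelation pitch estimator, loosely in the spirit of autocorrelation models of pitch such as Licklider's duplex theory but far simpler; the 8 kHz sample rate, 0.1 s duration and 50 to 1000 Hz search range are illustrative.

```python
import math


def harmonic_complex(f0, harmonics, fs, n_samples):
    """Sum of unit sine partials at k * f0 for each k in `harmonics`.

    The fundamental itself (k = 1) is absent unless it is listed.
    """
    return [
        sum(math.sin(2 * math.pi * k * f0 * n / fs) for k in harmonics)
        for n in range(n_samples)
    ]


def autocorr_pitch(x, fs, fmin=50.0, fmax=1000.0):
    """Estimate pitch as fs / lag at the strongest raw autocorrelation peak."""
    min_lag = int(fs / fmax)
    max_lag = int(fs / fmin)
    best_lag, best_r = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        r = sum(x[n] * x[n + lag] for n in range(len(x) - lag))
        if r > best_r:
            best_lag, best_r = lag, r
    return fs / best_lag


if __name__ == "__main__":
    fs = 8000
    signal = harmonic_complex(200.0, [2, 3, 4, 5], fs, 800)  # partials 400-1000 Hz only
    print(f"estimated pitch: {autocorr_pitch(signal, fs):.1f} Hz")
```

The waveform contains only 400, 600, 800 and 1000 Hz, yet its strongest self-similarity is at a lag of 40 samples (5 ms), so the estimator reports 200 Hz, the missing fundamental.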

Software

Perceptual audio coding uses psychoacoustics-based algorithms.

The psychoacoustic model provides for high quality lossy signal compression by describing which parts of a given digital audio signal can be removed (or aggressively compressed) safely—that is, without significant losses in the (consciously) perceived quality of the sound.

It can explain how a sharp clap of the hands might seem painfully loud in a quiet library but is hardly noticeable after a car backfires on a busy urban street. This greatly benefits the overall compression ratio: psychoacoustic analysis routinely yields compressed music files that are 1/10 to 1/12 the size of high-quality masters, with a perceived quality loss far smaller than that reduction in size would suggest. Such compression is a feature of nearly all modern lossy audio compression formats, including Dolby Digital (AC-3), MP3, Opus, Ogg Vorbis, AAC, WMA, MPEG-1 Layer II (used for digital audio broadcasting in several countries) and ATRAC, the compression used in MiniDisc and some Walkman models.
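As a worked example of the 1/10 to 1/12 size figure, assume the high-quality master is CD audio (44.1 kHz, 16-bit, stereo) and the compressed file uses 128 kbit/s, a common MP3 bit rate; both values are assumptions, not from the text.

```python
def pcm_bitrate(sample_rate_hz: int, bits_per_sample: int, channels: int) -> int:
    """Bit rate of uncompressed linear PCM audio, in bits per second."""
    return sample_rate_hz * bits_per_sample * channels


def size_fraction(original_bps: float, compressed_bps: float) -> float:
    """How many times smaller the compressed stream is than the original."""
    return original_bps / compressed_bps


if __name__ == "__main__":
    cd_bps = pcm_bitrate(44_100, 16, 2)   # assumed master: CD audio
    mp3_bps = 128_000                     # assumed MP3 bit rate
    print(f"CD audio: {cd_bps} bit/s")
    print(f"128 kbit/s file is about 1/{size_fraction(cd_bps, mp3_bps):.1f} the size")
```

CD audio runs at 1,411,200 bit/s, so a 128 kbit/s file is about 1/11 of the original, inside the quoted range.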

Psychoacoustics is based heavily on human anatomy, especially the ear's limitations in perceiving sound as outlined previously. To summarize, these limitations are:

- High frequency limit

- Absolute threshold of hearing

- Temporal masking

- Simultaneous masking

Because the ear's perceptive capacity is reduced at these limits, a compression algorithm can assign a lower priority to sounds that fall outside them. By carefully shifting bits away from unimportant components and toward important ones, the algorithm ensures that the sounds a listener is most likely to perceive are reproduced at the highest quality.
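The bit-shifting idea can be sketched as a greedy allocator driven by the signal-to-mask ratio (SMR) of each frequency band. Each extra bit of quantizer resolution lowers quantization noise by about 6.02 dB, and the next bit goes to whichever band's noise is currently furthest above its masking threshold. A minimal sketch, assuming the per-band SMR values have already been produced by a psychoacoustic model; real encoders such as MP3 use more elaborate, standard-specific allocation loops.

```python
def allocate_bits(smr_db, budget, db_per_bit=6.02):
    """Greedy per-band bit allocation driven by signal-to-mask ratios (dB).

    A band whose SMR is <= 0 dB is already below its mask and never receives
    bits; allocation stops early once every band's noise is masked.
    """
    bits = [0] * len(smr_db)
    for _ in range(budget):
        # Noise-to-mask ratio of each band with its current bit count.
        nmr = [s - b * db_per_bit for s, b in zip(smr_db, bits)]
        if not nmr:
            break
        worst = max(range(len(nmr)), key=nmr.__getitem__)
        if nmr[worst] <= 0:
            break  # all quantization noise already sits below the mask
        bits[worst] += 1
    return bits


if __name__ == "__main__":
    # Hypothetical SMRs for four bands; the third band is fully masked.
    print(allocate_bits([20.0, 5.0, -3.0, 12.0], budget=8))
```

Here the fully masked band gets nothing and one bit of the budget is left unused, because after seven bits every band's quantization noise already falls below its masking threshold.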

Music

Psychoacoustics includes topics and studies that are relevant to music psychology and music therapy. Theorists such as Benjamin Boretz consider some of the results of psychoacoustics to be meaningful only in a musical context.[11]

Irv Teibel's Environments series LPs (1969–79) are an early example of commercially available sounds released expressly for enhancing psychological abilities.[12]

Applied psychoacoustics

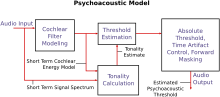

Psychoacoustics Model

Psychoacoustics has long enjoyed a symbiotic relationship with computer science, computer engineering, and computer networking. Internet pioneers J. C. R. Licklider and Bob Taylor both completed graduate-level work in psychoacoustics, while BBN Technologies originally specialized in consulting on acoustics issues before it began building the first packet-switched computer networks.

Licklider wrote a paper entitled "A duplex theory of pitch perception".[13]

Psychoacoustics is applied in many fields. In software development, developers map proven and experimental mathematical patterns; in digital signal processing, many audio compression codecs such as MP3 and Opus use a psychoacoustic model to increase compression ratios; and in the design of high-end audio systems, it supports accurate reproduction of music in theatres and homes. In defense, scientists have experimented, with limited success, with acoustic weapons that emit frequencies that may impair, harm, or kill.[14] Psychoacoustics is also leveraged in sonification to make multiple independent data dimensions audible and easily interpretable.[15] In music, musicians and artists continue to create new auditory experiences by masking unwanted frequencies of instruments, causing other frequencies to be enhanced. Yet another application is the design of small or lower-quality loudspeakers, which can exploit the missing fundamental phenomenon to create the impression of bass notes lower than the loudspeakers can physically reproduce (see references).
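The loudspeaker technique can be illustrated in its most idealized form: keep only the harmonics of a bass note that fall inside the speaker's passband, and the implied fundamental is still the original note. A minimal sketch, assuming (as a simplification) that the implied fundamental equals the greatest common divisor of the partial frequencies in whole hertz; the passband limits and function names are hypothetical, and a practical system must also generate and level-match these harmonics, which this sketch does not attempt.

```python
from functools import reduce
from math import gcd


def reproducible_harmonics(f0_hz, speaker_low_hz, speaker_high_hz, count=4):
    """First `count` harmonics (k >= 2) of f0 that lie inside the speaker passband."""
    k = max(-(-speaker_low_hz // f0_hz), 2)  # ceiling division: lowest usable k
    harmonics = []
    while len(harmonics) < count and k * f0_hz <= speaker_high_hz:
        harmonics.append(k * f0_hz)
        k += 1
    return harmonics


def implied_fundamental(partials_hz):
    """Idealized implied pitch: greatest common divisor of the partials."""
    return reduce(gcd, partials_hz)


if __name__ == "__main__":
    # Hypothetical small speaker that reproduces 120 Hz to 2 kHz, 50 Hz bass note.
    partials = reproducible_harmonics(50, 120, 2000)
    print(partials, "->", implied_fundamental(partials), "Hz")
```

A 50 Hz note becomes partials at 150, 200, 250 and 300 Hz, all within the hypothetical passband, whose common spacing still implies a 50 Hz pitch.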

See also

Related fields

- Cognitive neuroscience of music

- Music psychology

Psychoacoustic topics

- A-weighting, a commonly used perceptual loudness transfer function

- ABX test

- Auditory illusions

- Auditory scene analysis, including 3D-sound perception and localisation

- Binaural beats

- Blind signal separation

- Deutsch's Scale illusion

- Equivalent rectangular bandwidth (ERB)

- Franssen effect

- Glissando illusion

- Haas effect

- Hypersonic effect

- Language processing

- Levitin effect

- Misophonia

- Musical tuning

- Noise health effects

- Octave illusion

- Pitch (music)

- Precedence effect

- Psycholinguistics

- Rate-distortion theory

- Sound localization

- Sound of fingernails scraping chalkboard

- Sound masking

- Speech recognition

- Timbre

- Tritone paradox

References

Notes

^ Ballou, G (2008). Handbook for Sound Engineers (Fourth ed.). Burlington: Focal Press. p. 43.

^ Christopher J. Plack (2005). The Sense of Hearing. Routledge. ISBN 978-0-8058-4884-7.

^ Lars Ahlzen; Clarence Song (2003). The Sound Blaster Live! Book. No Starch Press. ISBN 978-1-886411-73-9.

^ Rudolf F. Graf (1999). Modern dictionary of electronics. Newnes. ISBN 978-0-7506-9866-5.

^ Jack Katz; Robert F. Burkard & Larry Medwetsky (2002). Handbook of Clinical Audiology. Lippincott Williams & Wilkins. ISBN 978-0-683-30765-8.

^ Olson, Harry F. (1967). Music, Physics and Engineering. Dover Publications. pp. 248–251. ISBN 978-0-486-21769-7.

^ Fastl, Hugo; Zwicker, Eberhard (2006). Psychoacoustics: Facts and Models. Springer. pp. 21–22. ISBN 978-3-540-23159-2.

^ Thompson, Daniel M. (2005). Understanding Audio: Getting the Most out of Your Project or Professional Recording Studio. Boston, MA: Berklee.

^ Roads, Curtis (2007). The Computer Music Tutorial. Cambridge, MA: MIT.

^ Lewis, D.P. (2007). "Owl ears and hearing". Owl Pages. http://www.owlpages.com/articles.php?section=Owl+Physiology&title=Hearing. Retrieved 5 April 2011.

^ Sterne, Jonathan (2003). The Audible Past: Cultural Origins of Sound Reproduction. Durham: Duke University Press.

^ Cummings, Jim. "Irv Teibel died this week: Creator of 1970s "Environments" LPs". Earth Ear. Retrieved 18 November 2015.

^ Rappold, Raychel. Biography. Rochester University. Retrieved 2015-08-08.

^ "Archived copy". Archived from the original on 2010-07-19. Retrieved 2010-02-06.

^ Ziemer, Tim; Schultheis, Holger; Black, David; Kikinis, Ron (2018). "Psychoacoustical Interactive Sonification for Short Range Navigation". Acta Acustica United with Acustica. 104 (6): 1075–1093. doi:10.3813/AAA.919273. Retrieved 31 January 2019.

Sources

- Larsen E.; Aarts R.M. (2004). Audio Bandwidth Extension: Application of Psychoacoustics, Signal Processing and Loudspeaker Design. J. Wiley.

- Larsen E.; Aarts R.M. (March 2002). "Reproducing Low-pitched Signals through Small Loudspeakers" (PDF). Journal of the Audio Engineering Society. 50 (3): 147–64. [dead link]

- Oohashi T.; Kawai N.; Nishina E.; Honda M.; Yagi R.; Nakamura S.; Morimoto M.; Maekawa T.; Yonekura Y.; Shibasaki H. (February 2006). "The Role of Biological System other Than Auditory Air-conduction in the Emergence of the Hypersonic Effect". Brain Research. 1073-1074: 339–347. doi:10.1016/j.brainres.2005.12.096. PMID 16458271.

External links

- The Musical Ear—Perception of Sound

- Müller C, Schnider P, Persterer A, Opitz M, Nefjodova MV, Berger M (1993). "[Applied psychoacoustics in space flight]". Wien Med Wochenschr (in German). 143 (23–24): 633–5. PMID 8178525. Simulation of Free-field Hearing by Head Phones

- GPSYCHO: An Open-source Psycho-Acoustic and Noise-Shaping Model for ISO-Based MP3 Encoders.

- Definition of: perceptual audio coding

- Java applet demonstrating masking

- HyperPhysics Concepts—Sound and Hearing

- The MP3 as Standard Object