Ethernet

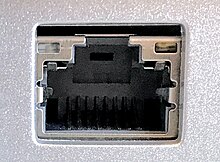

A twisted pair cable with an 8P8C modular connector attached to a laptop computer, used for Ethernet

An Ethernet over twisted pair port

Ethernet /ˈiːθərnɛt/ is a family of computer networking technologies commonly used in local area networks (LAN), metropolitan area networks (MAN) and wide area networks (WAN).[1] It was commercially introduced in 1980 and first standardized in 1983 as IEEE 802.3,[2] and has since been refined to support higher bit rates and longer link distances. Over time, Ethernet has largely replaced competing wired LAN technologies such as Token Ring, FDDI and ARCNET.

The original 10BASE5 Ethernet uses coaxial cable as a shared medium, while the newer Ethernet variants use twisted pair and fiber optic links in conjunction with switches. Over the course of its history, Ethernet data transfer rates have been increased from the original 2.94 megabits per second (Mbit/s)[3] to the latest 400 gigabits per second (Gbit/s). The Ethernet standards comprise several wiring and signaling variants of the OSI physical layer in use with Ethernet.

Systems communicating over Ethernet divide a stream of data into shorter pieces called frames. Each frame contains source and destination addresses, and error-checking data so that damaged frames can be detected and discarded; most often, higher-layer protocols trigger retransmission of lost frames. As per the OSI model, Ethernet provides services up to and including the data link layer.[4]

Since its commercial release, Ethernet has retained a good degree of backward compatibility. Features such as the 48-bit MAC address and Ethernet frame format have influenced other networking protocols. The primary alternative for some uses of contemporary LANs is Wi-Fi, a wireless protocol standardized as IEEE 802.11.[5]

Contents

1 History

2 Standardization

3 Evolution

3.1 Shared media

3.2 Repeaters and hubs

3.3 Bridging and switching

3.4 Advanced networking

4 Varieties

5 Frame structure

6 Autonegotiation

7 Error conditions

7.1 Switching loop

7.2 Jabber

7.3 Runt frames

8 See also

9 Notes

10 References

11 Further reading

12 External links

History

Accton Etherpocket-SP parallel port Ethernet adapter (circa 1990). Supports both coaxial (10BASE2) and twisted pair (10BASE-T) cables. Power is drawn from a PS/2 port passthrough cable.

Ethernet was developed at Xerox PARC between 1973 and 1974.[6][7] It was inspired by ALOHAnet, which Robert Metcalfe had studied as part of his PhD dissertation.[8] The idea was first documented in a memo that Metcalfe wrote on May 22, 1973, where he named it after the luminiferous aether once postulated to exist as an "omnipresent, completely-passive medium for the propagation of electromagnetic waves."[6][9][10] In 1975, Xerox filed a patent application listing Metcalfe, David Boggs, Chuck Thacker, and Butler Lampson as inventors.[11] In 1976, after the system was deployed at PARC, Metcalfe and Boggs published a seminal paper.[12][a] That same year, Ron Crane, Bob Garner, and Roy Ogus facilitated the upgrade from the original 2.94 Mbit/s protocol to the 10 Mbit/s protocol which was released to the market in 1980.[14]

Metcalfe left Xerox in June 1979 to form 3Com.[6][15] He convinced Digital Equipment Corporation (DEC), Intel, and Xerox to work together to promote Ethernet as a standard. As part of that process, Xerox agreed to relinquish its 'Ethernet' trademark.[16] The first standard was published on September 30, 1980 as "The Ethernet, A Local Area Network. Data Link Layer and Physical Layer Specifications". This so-called DIX standard (Digital Intel Xerox) specified 10 Mbit/s Ethernet, with 48-bit destination and source addresses and a global 16-bit EtherType field.[17] Version 2 was published in November 1982[18] and defines what has become known as Ethernet II. Formal standardization efforts proceeded at the same time and resulted in the publication of IEEE 802.3 on June 23, 1983.[2]

Ethernet initially competed with Token Ring and other proprietary protocols. Ethernet was able to adapt to market realities and shift to inexpensive thin coaxial cable and then ubiquitous twisted pair wiring. By the end of the 1980s, Ethernet was clearly the dominant network technology.[6] In the process, 3Com became a major company. 3Com shipped its first 10 Mbit/s Ethernet 3C100 NIC in March 1981, and that year started selling adapters for PDP-11s and VAXes, as well as Multibus-based Intel and Sun Microsystems computers.[19]:9 This was followed quickly by DEC's Unibus to Ethernet adapter, which DEC sold and used internally to build its own corporate network, which reached over 10,000 nodes by 1986, making it one of the largest computer networks in the world at that time.[20] An Ethernet adapter card for the IBM PC was released in 1982, and, by 1985, 3Com had sold 100,000.[15] Parallel-port-based Ethernet adapters were produced for a time, with drivers for DOS and Windows. By the early 1990s, Ethernet became so prevalent that it was a must-have feature for modern computers, and Ethernet ports began to appear on some PCs and most workstations. This process was greatly sped up with the introduction of 10BASE-T and its relatively small modular connector, at which point Ethernet ports appeared even on low-end motherboards.

Since then, Ethernet technology has evolved to meet new bandwidth and market requirements.[21] In addition to computers, Ethernet is now used to interconnect appliances and other personal devices.[6] As Industrial Ethernet it is used in industrial applications and is quickly replacing legacy data transmission systems in the world's telecommunications networks.[22] By 2010, the market for Ethernet equipment amounted to over $16 billion per year.[23]

Standardization

An Intel 82574L Gigabit Ethernet NIC, PCI Express ×1 card

In February 1980, the Institute of Electrical and Electronics Engineers (IEEE) started project 802 to standardize local area networks (LAN).[15][24] The "DIX-group" with Gary Robinson (DEC), Phil Arst (Intel), and Bob Printis (Xerox) submitted the so-called "Blue Book" CSMA/CD specification as a candidate for the LAN specification.[17] In addition to CSMA/CD, Token Ring (supported by IBM) and Token Bus (selected and henceforward supported by General Motors) were also considered as candidates for a LAN standard. Competing proposals and broad interest in the initiative led to strong disagreement over which technology to standardize. In December 1980, the group was split into three subgroups, and standardization proceeded separately for each proposal.[15]

Delays in the standards process put at risk the market introduction of the Xerox Star workstation and 3Com's Ethernet LAN products. With such business implications in mind, David Liddle (General Manager, Xerox Office Systems) and Metcalfe (3Com) strongly supported a proposal by Fritz Röscheisen (Siemens Private Networks) for an alliance in the emerging office communication market, including Siemens' support for the international standardization of Ethernet (April 10, 1981). Ingrid Fromm, Siemens' representative to IEEE 802, quickly achieved broader support for Ethernet beyond IEEE by the establishment of a competing Task Group "Local Networks" within the European standards body ECMA TC24. In March 1982, ECMA TC24 with its corporate members reached an agreement on a standard for CSMA/CD based on the IEEE 802 draft.[19]:8 Because the DIX proposal was most technically complete and because of the speedy action taken by ECMA which decisively contributed to the conciliation of opinions within IEEE, the IEEE 802.3 CSMA/CD standard was approved in December 1982.[15] IEEE published the 802.3 standard as a draft in 1983 and as a standard in 1985.[25]

Approval of Ethernet on the international level was achieved by a similar, cross-partisan action with Fromm as the liaison officer working to integrate with International Electrotechnical Commission (IEC) Technical Committee 83 (TC83) and International Organization for Standardization (ISO) Technical Committee 97 Sub Committee 6 (TC97SC6). The ISO 8802-3 standard was published in 1989.[26]

Evolution

| Internet protocol suite |
|---|
| Application layer |
| Transport layer |
| Internet layer |
| Link layer |

Ethernet has evolved to include higher bandwidth, improved medium access control methods, and different physical media. The coaxial cable was replaced with point-to-point links connected by Ethernet repeaters or switches.[27]

Ethernet stations communicate by sending each other data packets: blocks of data individually sent and delivered. As with other IEEE 802 LANs, each Ethernet station is given a 48-bit MAC address. The MAC addresses are used to specify both the destination and the source of each data packet. Ethernet establishes link-level connections, which can be defined using both the destination and source addresses. On reception of a transmission, the receiver uses the destination address to determine whether the transmission is relevant to the station or should be ignored. A network interface normally does not accept packets addressed to other Ethernet stations.[b] Adapters come programmed with a globally unique address.[c]
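
The receive-side address check described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular adapter is implemented: the helper names `parse_mac` and `accepts` are hypothetical, and multicast group membership is deliberately left out.

```python
BROADCAST = bytes.fromhex("ffffffffffff")  # all-ones 48-bit broadcast address


def parse_mac(text: str) -> bytes:
    """Convert 'aa:bb:cc:dd:ee:ff' into 6 raw octets (48 bits)."""
    raw = bytes(int(part, 16) for part in text.split(":"))
    if len(raw) != 6:
        raise ValueError("a MAC address has exactly 6 octets")
    return raw


def accepts(destination: bytes, own_address: bytes, promiscuous: bool = False) -> bool:
    """Return True if the interface should hand the frame to the host.

    Simplification: only unicast-to-self and broadcast are accepted;
    multicast filtering is not modeled.
    """
    if promiscuous:
        return True  # promiscuous mode accepts everything
    return destination == own_address or destination == BROADCAST
```

For example, `accepts(parse_mac("02:00:00:00:00:02"), parse_mac("02:00:00:00:00:01"))` is false, but becomes true once `promiscuous=True` is passed.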

An EtherType field in each frame is used by the operating system on the receiving station to select the appropriate protocol module (e.g., an Internet Protocol version such as IPv4). Ethernet frames are said to be self-identifying, because of the EtherType field. Self-identifying frames make it possible to intermix multiple protocols on the same physical network and allow a single computer to use multiple protocols together.[28] Despite the evolution of Ethernet technology, all generations of Ethernet (excluding early experimental versions) use the same frame formats.[29] Mixed-speed networks can be built using Ethernet switches and repeaters supporting the desired Ethernet variants.[30]
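
As a rough sketch of how a receiving host might use the EtherType to select a protocol module, the following Python reads the 16-bit field that follows the two 6-byte addresses and looks up a handler. The `HANDLERS` table and `dispatch` function are hypothetical names for illustration; the three EtherType values are the commonly registered ones for IPv4, ARP, and IPv6.

```python
import struct

ETHERTYPE_IPV4 = 0x0800
ETHERTYPE_ARP = 0x0806
ETHERTYPE_IPV6 = 0x86DD

# Hypothetical protocol modules: each just describes what it received.
HANDLERS = {
    ETHERTYPE_IPV4: lambda payload: f"IPv4 packet, {len(payload)} bytes",
    ETHERTYPE_ARP: lambda payload: f"ARP message, {len(payload)} bytes",
    ETHERTYPE_IPV6: lambda payload: f"IPv6 packet, {len(payload)} bytes",
}


def dispatch(frame: bytes) -> str:
    """Select a handler from the EtherType.

    `frame` is assumed to start at the destination address
    (no preamble) and to have had its frame check sequence removed.
    """
    if len(frame) < 14:
        raise ValueError("frame shorter than the 14-byte header")
    (ethertype,) = struct.unpack("!H", frame[12:14])  # network byte order
    handler = HANDLERS.get(ethertype)
    if handler is None:
        return f"unknown EtherType 0x{ethertype:04x}, dropped"
    return handler(frame[14:])
```

Because the frame identifies its own payload type, adding another protocol only means adding another entry to the table.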

Due to the ubiquity of Ethernet, the ever-decreasing cost of the hardware needed to support it, and the reduced panel space needed by twisted pair Ethernet, most manufacturers now build Ethernet interfaces directly into PC motherboards, eliminating the need for installation of a separate network card.[31]

Shared media

Older Ethernet equipment. Clockwise from top-left: An Ethernet transceiver with an in-line 10BASE2 adapter, a similar model transceiver with a 10BASE5 adapter, an AUI cable, a different style of transceiver with 10BASE2 BNC T-connector, two 10BASE5 end fittings (N connectors), an orange "vampire tap" installation tool (which includes a specialized drill bit at one end and a socket wrench at the other), and an early model 10BASE5 transceiver (h4000) manufactured by DEC. The short length of yellow 10BASE5 cable has one end fitted with an N connector and the other end prepared to have an N connector shell installed; the half-black, half-grey rectangular object through which the cable passes is an installed vampire tap.

Ethernet was originally based on the idea of computers communicating over a shared coaxial cable acting as a broadcast transmission medium. The method used was similar to those used in radio systems,[d] with the common cable providing the communication channel likened to the luminiferous aether of 19th-century physics, and it was from this reference that the name "Ethernet" was derived.[32]

Original Ethernet's shared coaxial cable (the shared medium) traversed a building or campus to every attached machine. A scheme known as carrier sense multiple access with collision detection (CSMA/CD) governed the way the computers shared the channel. This scheme was simpler than competing Token Ring or Token Bus technologies.[e] Computers are connected to an Attachment Unit Interface (AUI) transceiver, which is in turn connected to the cable (with thin Ethernet the transceiver is integrated into the network adapter). While a simple passive wire is highly reliable for small networks, it is not reliable for large extended networks, where damage to the wire in a single place, or a single bad connector, can make the whole Ethernet segment unusable.[f]
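
Collision detection only works if a station is still transmitting when news of a collision at the far end of the cable gets back to it. The following back-of-the-envelope sketch computes how many bits must be in a frame for that to hold. The function name, the 2,500 m span, and the propagation speed of 2×10^8 m/s are illustrative assumptions, and the calculation ignores repeater and transceiver delays, so real standards budgets are larger.

```python
def min_frame_bits(cable_length_m: float, bit_rate: float,
                   velocity_m_per_s: float = 2.0e8) -> float:
    """Bits a station must still be sending when a far-end collision returns.

    The signal has to travel to the far end and the collision has to
    travel back, hence the factor of two.
    """
    round_trip_s = 2 * cable_length_m / velocity_m_per_s
    return bit_rate * round_trip_s


# Assumed example: a 2,500 m network running at 10 Mbit/s.
bits = min_frame_bits(2500, 10e6)
```

Under these assumptions the round trip takes 25 microseconds, or about 250 bit times, which shows why minimum frame size and maximum network extent cannot be chosen independently.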

Through the first half of the 1980s, Ethernet's 10BASE5 implementation used a coaxial cable 0.375 inches (9.5 mm) in diameter, later called "thick Ethernet" or "thicknet". Its successor, 10BASE2, called "thin Ethernet" or "thinnet", used the RG-58 coaxial cable. The emphasis was on making installation of the cable easier and less costly.[33]:57

Since all communication happens on the same wire, any information sent by one computer is received by all, even if that information is intended for just one destination.[g] The network interface card interrupts the CPU only when applicable packets are received: the card ignores information not addressed to it.[h] Use of a single cable also means that the data bandwidth is shared, such that, for example, available data bandwidth to each device is halved when two stations are simultaneously active.[34]

A collision happens when two stations attempt to transmit at the same time. Collisions corrupt the transmitted data and require the stations to re-transmit. The lost data and re-transmissions reduce throughput. In the worst case, where multiple active hosts connected with maximum allowed cable length attempt to transmit many short frames, excessive collisions can reduce throughput dramatically. However, a Xerox report in 1980 studied performance of an existing Ethernet installation under both normal and artificially generated heavy load. The report claimed that 98% throughput on the LAN was observed.[35] This is in contrast with token passing LANs (Token Ring, Token Bus), all of which suffer throughput degradation as each new node comes into the LAN, due to token waits. This report was controversial, as modeling showed that collision-based networks theoretically became unstable under loads as low as 37% of nominal capacity. Many early researchers failed to understand these results. Performance on real networks is significantly better.[36]

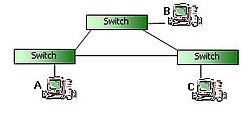

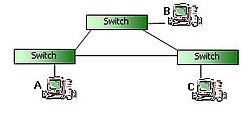

In a modern Ethernet, the stations do not all share one channel through a shared cable or a simple repeater hub; instead, each station communicates with a switch, which in turn forwards that traffic to the destination station. In this topology, collisions are only possible if station and switch attempt to communicate with each other at the same time, and collisions are limited to this link. Furthermore, the 10BASE-T standard introduced a full duplex mode of operation which became common with Fast Ethernet and the de facto standard with Gigabit Ethernet. In full duplex, switch and station can send and receive simultaneously, and therefore modern Ethernets are completely collision-free.

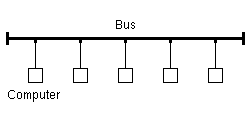

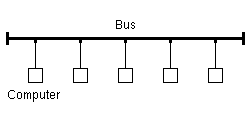

- Comparison between original Ethernet and modern Ethernet

The original Ethernet implementation: shared medium, collision-prone. All computers trying to communicate share the same cable, and so compete with each other.

Modern Ethernet implementation: switched connection, collision-free. Each computer communicates only with its own switch, without competition for the cable with others.

Repeaters and hubs

A 1990s ISA network interface card supporting both coaxial-cable-based 10BASE2 (BNC connector, left) and twisted pair-based 10BASE-T (8P8C connector, right)

For signal degradation and timing reasons, coaxial Ethernet segments have a restricted size.[37] Somewhat larger networks can be built by using an Ethernet repeater. Early repeaters had only two ports, allowing, at most, a doubling of network size. Once repeaters with more than two ports became available, it was possible to wire the network in a star topology. Early experiments with star topologies (called "Fibernet") using optical fiber were published by 1978.[38]

Shared cable Ethernet is always hard to install in offices because its bus topology is in conflict with the star topology cable plans designed into buildings for telephony. Modifying Ethernet to conform to twisted pair telephone wiring already installed in commercial buildings provided another opportunity to lower costs, expand the installed base, and leverage building design, and, thus, twisted-pair Ethernet was the next logical development in the mid-1980s.

Ethernet on unshielded twisted-pair cables (UTP) began with StarLAN at 1 Mbit/s in the mid-1980s. In 1987 SynOptics introduced the first twisted-pair Ethernet at 10 Mbit/s in a star-wired cabling topology with a central hub, later called LattisNet.[15][39][40] These evolved into 10BASE-T, which was designed for point-to-point links only, and all termination was built into the device. This changed repeaters from a specialist device used at the center of large networks to a device that every twisted pair-based network with more than two machines had to use. The tree structure that resulted from this made Ethernet networks easier to maintain by preventing most faults with one peer or its associated cable from affecting other devices on the network.

Despite the physical star topology and the presence of separate transmit and receive channels in the twisted pair and fiber media, repeater-based Ethernet networks still use half-duplex and CSMA/CD, with only minimal activity by the repeater, primarily generation of the jam signal in dealing with packet collisions. Every packet is sent to every other port on the repeater, so bandwidth and security problems are not addressed. The total throughput of the repeater is limited to that of a single link, and all links must operate at the same speed.

Bridging and switching

Patch cables with patch fields of two Ethernet switches

While repeaters can isolate some aspects of Ethernet segments, such as cable breakages, they still forward all traffic to all Ethernet devices. The entire network is one collision domain, and all hosts have to be able to detect collisions anywhere on the network. This limits the number of repeaters between the farthest nodes and creates practical limits on how many machines can communicate on an Ethernet network. Segments joined by repeaters have to all operate at the same speed, making phased-in upgrades impossible.

To alleviate these problems, bridging was created to communicate at the data link layer while isolating the physical layer. With bridging, only well-formed Ethernet packets are forwarded from one Ethernet segment to another; collisions and packet errors are isolated. At initial startup, Ethernet bridges work somewhat like Ethernet repeaters, passing all traffic between segments. By observing the source addresses of incoming frames, the bridge then builds an address table associating addresses to segments. Once an address is learned, the bridge forwards network traffic destined for that address only to the associated segment, improving overall performance. Broadcast traffic is still forwarded to all network segments. Bridges also overcome the limits on total segments between two hosts and allow the mixing of speeds, both of which are critical to incremental deployment of faster Ethernet variants.
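
The learning behavior described above can be sketched as a small Python class. This is a simplified model, assuming one segment per port and no aging of table entries; the class and method names are hypothetical.

```python
from typing import Dict, List

BROADCAST = "ff:ff:ff:ff:ff:ff"


class LearningBridge:
    """Minimal model of a transparent bridge with an address table."""

    def __init__(self, ports: List[int]) -> None:
        self.ports = list(ports)
        self.table: Dict[str, int] = {}  # MAC address -> port (segment)

    def receive(self, in_port: int, src: str, dst: str) -> List[int]:
        """Process one frame and return the ports it is forwarded to."""
        # Learn: the source address is reachable via the arrival port.
        self.table[src] = in_port

        out_port = self.table.get(dst)
        if dst == BROADCAST or out_port is None:
            # Broadcast or unknown destination: flood to all other ports.
            return [p for p in self.ports if p != in_port]
        if out_port == in_port:
            # Destination is on the segment the frame came from: filter.
            return []
        return [out_port]
```

With ports 1 to 3, the first frame from station A (on port 1) to an unknown station B is flooded to ports 2 and 3; once B has answered from port 2, later frames from A to B go to port 2 only.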

In 1989, the networking company Kalpana[i] introduced their EtherSwitch, the first Ethernet switch.[j] Early switches such as this used cut-through switching where only the header of the incoming packet is examined before it is either dropped or forwarded to another segment.[41] This reduces the forwarding latency. One drawback of this method is that it does not readily allow a mixture of different link speeds. Another is that packets that have been corrupted are still propagated through the network. The eventual remedy for this was a return to the original store-and-forward approach of bridging, where the packet is read into a buffer on the switch in its entirety and its frame check sequence is verified, and only then is the packet forwarded. This process is typically done using application-specific integrated circuits allowing packets to be forwarded at wire speed.
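
The latency difference between the two approaches can be estimated from how many bytes the switch must receive before it can start forwarding. The sketch below is illustrative arithmetic only: it assumes a 14-byte header (two 6-byte addresses plus the 2-byte EtherType), a 1518-byte maximum-size untagged frame, and a 10 Mbit/s link, and it ignores the preamble and the switch's own lookup time. The function name is hypothetical.

```python
HEADER_BYTES = 14        # destination + source + EtherType
MAX_FRAME_BYTES = 1518   # maximum untagged frame including the FCS


def receive_delay_us(n_bytes: int, bit_rate: float) -> float:
    """Microseconds needed to receive n_bytes at the given bit rate."""
    return n_bytes * 8 / bit_rate * 1e6


cut_through = receive_delay_us(HEADER_BYTES, 10e6)            # header only
store_and_forward = receive_delay_us(MAX_FRAME_BYTES, 10e6)   # whole frame
```

Under these assumptions a cut-through switch waits roughly 11 microseconds, while a store-and-forward switch waits about 1.2 milliseconds for a full-size frame before forwarding can begin.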

When a twisted pair or fiber link segment is used and neither end is connected to a repeater, full-duplex Ethernet becomes possible over that segment. In full-duplex mode, both devices can transmit and receive to and from each other at the same time, and there is no collision domain. This doubles the aggregate bandwidth of the link and is sometimes advertised as double the link speed (for example, 200 Mbit/s for Fast Ethernet).[k] The elimination of the collision domain for these connections also means that all the link's bandwidth can be used by the two devices on that segment and that segment length is not limited by the need for correct collision detection.

Since packets are typically delivered only to the port they are intended for, traffic on a switched Ethernet is less public than on shared-medium Ethernet. Despite this, switched Ethernet should still be regarded as an insecure network technology, because it is easy to subvert switched Ethernet systems by means such as ARP spoofing and MAC flooding.

The bandwidth advantages, the improved isolation of devices from each other, the ability to easily mix different speeds of devices and the elimination of the chaining limits inherent in non-switched Ethernet have made switched Ethernet the dominant network technology.[42]

Advanced networking

A core Ethernet switch

Simple switched Ethernet networks, while a great improvement over repeater-based Ethernet, suffer from single points of failure, attacks that trick switches or hosts into sending data to a machine even if it is not intended for it, scalability and security issues with regard to switching loops, broadcast radiation and multicast traffic, and bandwidth choke points where a lot of traffic is forced down a single link.[citation needed]

Advanced networking features in switches use shortest path bridging (SPB) or the spanning-tree protocol (STP) to maintain a loop-free, meshed network, allowing physical loops for redundancy (STP) or load-balancing (SPB). Advanced networking features also ensure port security, provide protection features such as MAC lockdown and broadcast radiation filtering, use virtual LANs to keep different classes of users separate while using the same physical infrastructure, employ multilayer switching to route between different classes, and use link aggregation to add bandwidth to overloaded links and to provide some redundancy.

Shortest path bridging includes the use of the link-state routing protocol IS-IS to allow larger networks with shortest path routes between devices. In 2012, David Allan and Nigel Bragg stated in 802.1aq Shortest Path Bridging Design and Evolution: The Architect's Perspective that shortest path bridging is one of the most significant enhancements in Ethernet's history.[43]

Ethernet has replaced InfiniBand as the most popular system interconnect of TOP500 supercomputers.[44]

Varieties

The Ethernet physical layer evolved over a considerable time span and encompasses coaxial, twisted pair and fiber-optic physical media interfaces, with speeds from 10 Mbit/s to 100 Gbit/s, with 400 Gbit/s expected by 2018.[45] The first introduction of twisted-pair CSMA/CD was StarLAN, standardized as 802.3 1BASE5.[46] While 1BASE5 had little market penetration, it defined the physical apparatus (wire, plug/jack, pin-out, and wiring plan) that would be carried over to 10BASE-T.

The most common forms used are 10BASE-T, 100BASE-TX, and 1000BASE-T. All three use twisted pair cables and 8P8C modular connectors. They run at 10 Mbit/s, 100 Mbit/s, and 1 Gbit/s, respectively.

Fiber optic variants of Ethernet are also very common in larger networks, offering high performance, better electrical isolation and longer distance (tens of kilometers with some versions). In general, network protocol stack software will work similarly on all varieties.

Frame structure

A close-up of the SMSC LAN91C110 (SMSC 91x) chip, an embedded Ethernet chip.

In IEEE 802.3, a datagram is called a packet or frame. Packet is used to describe the overall transmission unit and includes the preamble, start frame delimiter (SFD) and carrier extension (if present).[l] The frame begins after the start frame delimiter with a frame header featuring source and destination MAC addresses and the EtherType field giving either the protocol type for the payload protocol or the length of the payload. The middle section of the frame consists of payload data including any headers for other protocols (for example, Internet Protocol) carried in the frame. The frame ends with a 32-bit cyclic redundancy check, which is used to detect corruption of data in transit.[47]:sections 3.1.1 and 3.2 Notably, Ethernet packets have no time-to-live field, leading to possible problems in the presence of a switching loop.
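
The layout described above (addresses, EtherType, payload, 32-bit check) can be sketched in Python. This is a minimal illustration under stated assumptions: the preamble and start frame delimiter are omitted, short payloads are zero-padded to the 46-byte minimum, and the check value from `zlib.crc32`, which uses the same CRC-32 polynomial, is appended in little-endian byte order. The function names are hypothetical.

```python
import struct
import zlib

MIN_PAYLOAD = 46  # pads the frame to the 64-byte minimum
MIN_FRAME = 64


def build_frame(dst: bytes, src: bytes, ethertype: int, payload: bytes) -> bytes:
    """Assemble header + payload and append the frame check sequence."""
    if len(dst) != 6 or len(src) != 6:
        raise ValueError("addresses must be 6 octets")
    payload = payload.ljust(MIN_PAYLOAD, b"\x00")  # zero-pad short payloads
    body = dst + src + struct.pack("!H", ethertype) + payload
    fcs = struct.pack("<I", zlib.crc32(body))      # assumed byte order
    return body + fcs


def parse_frame(frame: bytes):
    """Verify the check sequence, then split the frame into its fields."""
    if len(frame) < MIN_FRAME:
        raise ValueError("runt frame")
    body, fcs = frame[:-4], frame[-4:]
    if struct.pack("<I", zlib.crc32(body)) != fcs:
        raise ValueError("frame check sequence mismatch")
    (ethertype,) = struct.unpack("!H", body[12:14])
    return body[:6], body[6:12], ethertype, body[14:]
```

Flipping any single bit of a built frame makes `parse_frame` raise, which is the "detect and discard" behavior the check sequence exists for. Note that the returned payload still contains any padding; removing it is left to the higher-layer protocol.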

Autonegotiation

Autonegotiation is the procedure by which two connected devices choose common transmission parameters, e.g. speed and duplex mode. Autonegotiation is an optional feature, first introduced with 100BASE-TX, while it is also backward compatible with 10BASE-T. Autonegotiation is mandatory for 1000BASE-T and faster.
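
The outcome of autonegotiation can be modeled as picking the best mode that both ends advertise. The sketch below is a simplification: it ranks modes by speed and then prefers full over half duplex, whereas the standard defines a fixed priority table, and it does not model the signaling itself. The names `Mode`, `rank`, and `negotiate` are hypothetical.

```python
from typing import Optional, Set, Tuple

Mode = Tuple[int, str]  # (speed in Mbit/s, "full" or "half")


def rank(mode: Mode) -> Tuple[int, int]:
    """Assumed ordering: higher speed first, then full duplex over half."""
    speed, duplex = mode
    return (speed, 1 if duplex == "full" else 0)


def negotiate(local: Set[Mode], remote: Set[Mode]) -> Optional[Mode]:
    """Return the highest-ranked mode both ends advertise, or None."""
    common = local & remote
    return max(common, key=rank) if common else None


gigabit_nic = {(10, "half"), (10, "full"), (100, "half"), (100, "full"), (1000, "full")}
fast_switch = {(10, "half"), (10, "full"), (100, "half"), (100, "full")}
chosen = negotiate(gigabit_nic, fast_switch)  # (100, "full")
```

Here a gigabit-capable adapter connected to a Fast Ethernet switch port settles on 100 Mbit/s full duplex, the best mode the two have in common.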

Error conditions

Switching loop

A switching loop or bridge loop occurs in computer networks when there is more than one Layer 2 (OSI model) path between two endpoints (e.g. multiple connections between two network switches or two ports on the same switch connected to each other). The loop creates broadcast storms: because switches forward broadcasts and multicasts out of every port, the switch or switches repeatedly rebroadcast the same messages, flooding the network. Since the Layer 2 header does not support a time to live (TTL) value, if a frame is sent into a looped topology, it can loop forever.
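
A toy simulation makes the effect visible. The sketch below floods a single broadcast through a set of switches that forward every copy out of every port except the one it arrived on, with no TTL and no loop protection, and counts the copies in flight after each step. It is an idealized lock-step model with hypothetical names, not a description of real switch timing.

```python
from typing import Dict, List


def flood_counts(links: Dict[str, List[str]], origin: str, steps: int) -> List[int]:
    """Count broadcast copies in flight after each forwarding step."""
    # Each in-flight copy is a (sender, receiver) pair.
    in_flight = [(origin, neighbor) for neighbor in links[origin]]
    counts = [len(in_flight)]
    for _ in range(steps - 1):
        in_flight = [
            (receiver, nxt)
            for sender, receiver in in_flight
            for nxt in links[receiver]
            if nxt != sender  # never send back out of the arrival port
        ]
        counts.append(len(in_flight))
    return counts


line = {"A": ["B"], "B": ["A", "C"], "C": ["B"]}                 # no loop
triangle = {"A": ["B", "C"], "B": ["A", "C"], "C": ["A", "B"]}   # one loop
mesh = {n: [m for m in "ABCD" if m != n] for n in "ABCD"}        # many loops
```

In the loop-free line the broadcast dies out after reaching the last switch. In the triangle two copies circulate indefinitely, and in the four-switch full mesh the number of copies doubles at every step.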

A physical topology that contains switching or bridge loops is attractive for redundancy reasons, yet a switched network must not have loops. The solution is to allow physical loops, but create a loop-free logical topology using the shortest path bridging (SPB) protocol or the older spanning tree protocols (STP) on the network switches.
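
The idea of a loop-free logical topology on top of a looped physical one can be illustrated with a breadth-first search that keeps just enough links to reach every switch and treats the rest as blocked. This is only a centralized sketch of the result: the actual spanning tree protocols elect a root and choose links in a distributed way by exchanging messages between switches, which is not modeled here. The function name and the example topology are hypothetical.

```python
from collections import deque
from typing import Dict, FrozenSet, List, Set


def spanning_tree(links: Dict[str, List[str]], root: str) -> Set[FrozenSet[str]]:
    """Return the links kept active; every other physical link is blocked."""
    visited = {root}
    active: Set[FrozenSet[str]] = set()
    queue = deque([root])
    while queue:
        node = queue.popleft()
        for neighbor in sorted(links[node]):
            if neighbor not in visited:
                visited.add(neighbor)
                active.add(frozenset((node, neighbor)))
                queue.append(neighbor)
    return active


# Three switches wired in a physical loop for redundancy.
triangle = {"A": ["B", "C"], "B": ["A", "C"], "C": ["A", "B"]}
active_links = spanning_tree(triangle, "A")
```

With switch A as the root, the links A-B and A-C stay active and B-C is blocked. The blocked link remains physically present, so it can be brought back into use if one of the active links fails.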

Jabber

A node that is sending longer than the maximum transmission window for an Ethernet packet is considered to be jabbering. Depending on the physical topology, jabber detection and remedy differ somewhat.

- An MAU is required to detect and stop abnormally long transmission from the DTE (longer than 20–150 ms) in order to prevent permanent network disruption.[48]

- On an electrically shared medium (10BASE5, 10BASE2, 1BASE5), jabber can only be detected by each end node, stopping reception. No further remedy is possible.[49]

- A repeater/repeater hub uses a jabber timer that ends retransmission to the other ports when it expires. The timer runs for 25,000 to 50,000 bit times for 1 Mbit/s,[50] 40,000 to 75,000 bit times for 10 and 100 Mbit/s,[51][52] and 80,000 to 150,000 bit times for 1 Gbit/s.[53] Jabbering ports are partitioned off the network until a carrier is no longer detected.[54]

- End nodes utilizing a MAC layer will usually detect an oversized Ethernet frame and cease receiving. A bridge/switch will not forward the frame.[55]

- A non-uniform frame size configuration in the network using jumbo frames may be detected as jabber by end nodes.

- A packet detected as jabber by an upstream repeater and subsequently cut off has an invalid frame check sequence and is dropped.
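
The repeater jabber timer ranges above are given in bit times, so their duration depends on the link speed. The following sketch converts the quoted ranges to milliseconds; the table simply restates the figures from the list, and the names are hypothetical.

```python
# Bit rate in bit/s -> (minimum, maximum) jabber timer in bit times,
# as quoted in the list above.
JABBER_BIT_TIMES = {
    1_000_000: (25_000, 50_000),
    10_000_000: (40_000, 75_000),
    100_000_000: (40_000, 75_000),
    1_000_000_000: (80_000, 150_000),
}


def jabber_window_ms(bit_rate: int):
    """Convert the jabber timer range for a bit rate into milliseconds."""
    low, high = JABBER_BIT_TIMES[bit_rate]
    return (low / bit_rate * 1000, high / bit_rate * 1000)
```

At 10 Mbit/s the timer therefore expires after 4 to 7.5 ms, and at 1 Gbit/s after 0.08 to 0.15 ms.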

Runt frames

Runts are packets or frames smaller than the minimum allowed size. They are dropped and not propagated.
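
A receiver's size check can be sketched as follows. The limits used are the classic ones, 64 bytes minimum and 1518 bytes maximum for an untagged frame measured from the destination address through the frame check sequence; VLAN tags and jumbo frames raise the upper limit, which this illustration does not model. The function name is hypothetical.

```python
MIN_FRAME = 64     # bytes, destination address through FCS
MAX_FRAME = 1518   # bytes, untagged frame (assumed limit for this sketch)


def classify(length: int) -> str:
    """Classify a received frame by its length in bytes."""
    if length < MIN_FRAME:
        return "runt"        # too short: dropped, not propagated
    if length > MAX_FRAME:
        return "oversized"   # too long for a standard untagged frame
    return "ok"
```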

See also

- 5-4-3 rule

- Chaosnet

- Ethernet crossover cable

- Fiber media converter

- List of device bit rates

- LocalTalk

- Metro Ethernet

- PHY (chip)

- Point-to-point protocol over Ethernet (PPPoE)

- Power over Ethernet (PoE)

- Sneakernet

- Wake-on-LAN (WoL)

Notes

^ The experimental Ethernet described in the 1976 paper ran at 2.94 Mbit/s and has eight-bit destination and source address fields, so the original Ethernet addresses are not the MAC addresses they are today.[13] By software convention, the 16 bits after the destination and source address fields specify a "packet type", but, as the paper says, "different protocols use disjoint sets of packet types". Thus the original packet types could vary within each different protocol. This is in contrast to the EtherType in the IEEE Ethernet standard, which specifies the protocol being used.

^ Unless it is put into promiscuous mode.

^ In some cases, the factory-assigned address can be overridden, either to avoid an address change when an adapter is replaced or to use locally administered addresses.

^ There are fundamental differences between wireless and wired shared-medium communication, such as the fact that it is much easier to detect collisions in a wired system than a wireless system.

^ In a CSMA/CD system packets must be large enough to guarantee that the leading edge of the propagating wave of a message gets to all parts of the medium and back again before the transmitter stops transmitting, guaranteeing that collisions (two or more packets initiated within a window of time that forced them to overlap) are discovered. As a result, the minimum packet size and the physical medium's total length are closely linked.

^ Multipoint systems are also prone to strange failure modes when an electrical discontinuity reflects the signal in such a manner that some nodes would work properly, while others work slowly because of excessive retries or not at all. See standing wave for an explanation. These could be much more difficult to diagnose than a complete failure of the segment.

^ This "one speaks, all listen" property is a security weakness of shared-medium Ethernet, since a node on an Ethernet network can eavesdrop on all traffic on the wire if it so chooses.

^ Unless it is put into promiscuous mode.

^ acquired by Cisco Systems, Inc. in 1994

^ The term switch was invented by device manufacturers and does not appear in the IEEE 802.3 standard.

^ This is misleading, as performance will double only if traffic patterns are symmetrical.

^ The carrier extension is defined to assist collision detection on shared-media gigabit Ethernet.

References

^ Ralph Santitoro (2003). "Metro Ethernet Services – A Technical Overview" (PDF). mef.net. Retrieved 2016-01-09.

^ a b "IEEE 802.3 'Standard for Ethernet' Marks 30 Years of Innovation and Global Market Growth" (Press release). IEEE. June 24, 2013. Retrieved January 11, 2014.

^ Xerox (August 1976). "Alto: A Personal Computer System Hardware Manual" (PDF). Xerox. p. 37. Retrieved 25 August 2015.

^ Charles M. Kozierok (2005-09-20). "Data Link Layer (Layer 2)". tcpipguide.com. Retrieved 2016-01-09.

^ Joe Jensen (2007-10-26). "802.11 g: Pros & Cons of a Wireless Network in a Business Environment". networkbits.net. Retrieved 2016-01-09.

^ a b c d e The History of Ethernet. NetEvents.tv. 2006. Retrieved September 10, 2011.

^ "Ethernet Prototype Circuit Board". Smithsonian National Museum of American History. 1973. Retrieved September 2, 2007.

^ Gerald W. Brock (September 25, 2003). The Second Information Revolution. Harvard University Press. p. 151. ISBN 0-674-01178-3.

^ Cade Metz (March 13, 2009). "Ethernet — a networking protocol name for the ages: Michelson, Morley, and Metcalfe". The Register: 2. Retrieved March 4, 2013.

^ Mary Bellis. "Inventors of the Modern Computer". About.com. Retrieved September 10, 2011.

^ U.S. Patent 4,063,220 "Multipoint data communication system (with collision detection)"

^ Robert Metcalfe; David Boggs (July 1976). "Ethernet: Distributed Packet Switching for Local Computer Networks" (PDF). Communications of the ACM. 19 (7): 395–405. doi:10.1145/360248.360253.

^ John F. Shoch; Yogen K. Dalal; David D. Redell; Ronald C. Crane (August 1982). "Evolution of the Ethernet Local Computer Network" (PDF). IEEE Computer. 15 (8): 14–26. doi:10.1109/MC.1982.1654107.

^ "Introduction to Ethernet Technologies". www.wband.com. WideBand Products. Retrieved 2018-04-09.

^ a b c d e f von Burg, Urs; Kenney, Martin (December 2003). "Sponsors, Communities, and Standards: Ethernet vs. Token Ring in the Local Area Networking Business" (PDF). Industry & Innovation. 10 (4): 351–375. doi:10.1080/1366271032000163621. Archived (PDF) from the original on March 22, 2012. Retrieved 17 February 2014.

^ Charles E. Spurgeon (February 2000). "Chapter 1. The Evolution of Ethernet". Ethernet: The Definitive Guide. ISBN 1565926609.

^ a b Digital Equipment Corporation; Intel Corporation; Xerox Corporation (30 September 1980). "The Ethernet, A Local Area Network. Data Link Layer and Physical Layer Specifications, Version 1.0" (PDF). Xerox Corporation. Retrieved 2011-12-10.

^ Digital Equipment Corporation; Intel Corporation; Xerox Corporation (November 1982). "The Ethernet, A Local Area Network. Data Link Layer and Physical Layer Specifications, Version 2.0" (PDF). Xerox Corporation. Retrieved 2011-12-10.

^ a b Robert Breyer; Sean Riley (1999). Switched, Fast, and Gigabit Ethernet. Macmillan. ISBN 1-57870-073-6.

^ Jamie Parker Pearson (1992). Digital at Work. Digital Press. p. 163. ISBN 1-55558-092-0.

^ Rick Merritt (December 20, 2010). "Shifts, growth ahead for 10G Ethernet". EE Times. Retrieved September 10, 2011.

^ "My oh My – Ethernet Growth Continues to Soar; Surpasses Legacy". Telecom News Now. July 29, 2011. Retrieved September 10, 2011.

^ Jim Duffy (February 22, 2010). "Cisco, Juniper, HP drive Ethernet switch market in Q4". Network World. Retrieved September 10, 2011.

^ Vic Hayes (August 27, 2001). "Letter to FCC" (PDF). Retrieved October 22, 2010. IEEE 802 has the basic charter to develop and maintain networking standards... IEEE 802 was formed in February 1980...

^ IEEE 802.3-2008, p.iv

^ "ISO 8802-3:1989". ISO. Retrieved 2015-07-08.

^ Jim Duffy (2009-04-20). "Evolution of Ethernet". Network World. Retrieved 2016-01-01.

^ Douglas E. Comer (2000). Internetworking with TCP/IP – Principles, Protocols and Architecture (4th ed.). Prentice Hall. ISBN 0-13-018380-6. 2.4.9 – Ethernet Hardware Addresses, p. 29, explains the filtering.

^ Iljitsch van Beijnum. "Speed matters: how Ethernet went from 3Mbps to 100Gbps... and beyond". Ars Technica. Retrieved July 15, 2011. All aspects of Ethernet were changed: its MAC procedure, the bit encoding, the wiring... only the packet format has remained the same.

^ Fast Ethernet Tutorial, Lantronix, retrieved 2016-01-01

^ Geetaj Channana (November 1, 2004). "Motherboard Chipsets Roundup". PCQuest. Retrieved October 22, 2010. While comparing motherboards in the last issue we found that all motherboards support Ethernet connection on board.

^ Charles E. Spurgeon (2000). Ethernet: The Definitive Guide. O'Reilly. ISBN 978-1-56592-660-8.

^ Heinz-Gerd Hegering; Alfred Lapple (1993). Ethernet: Building a Communications Infrastructure. Addison-Wesley. ISBN 0-201-62405-2.

^ Ethernet Tutorial – Part I: Networking Basics, Lantronix, retrieved 2016-01-01

^ Shoch, John F.; Hupp, Jon A. (December 1980). "Measured performance of an Ethernet local network". Communications of the ACM. ACM Press. 23 (12): 711–721. doi:10.1145/359038.359044. ISSN 0001-0782.

^ Boggs, D.R.; Mogul, J.C. & Kent, C.A. (September 1988). "Measured capacity of an Ethernet: myths and reality" (PDF). DEC WRL.

^ "Ethernet Media Standards and Distances". kb.wisc.edu. Retrieved 2017-10-10.

^ Eric G. Rawson; Robert M. Metcalfe (July 1978). "Fibernet: Multimode Optical Fibers for Local Computer Networks" (PDF). IEEE Transactions on Communications. 26 (7): 983–990. doi:10.1109/TCOM.1978.1094189. Retrieved June 11, 2011.

^ Spurgeon, Charles E. (2000). Ethernet: The Definitive Guide. Nutshell Handbook. O'Reilly. p. 29. ISBN 1-56592-660-9.

^ Urs von Burg (2001). The Triumph of Ethernet: technological communities and the battle for the LAN standard. Stanford University Press. p. 175. ISBN 0-8047-4094-1.

^ Robert J. Kohlhepp (2000-10-02). "The 10 Most Important Products of the Decade". Network Computing. Archived from the original on 2010-01-05. Retrieved 2008-02-25.

^ "Token Ring-to-Ethernet Migration". Cisco. Retrieved October 22, 2010. Respondents were first asked about their current and planned desktop LAN attachment standards. The results were clear: switched Fast Ethernet is the dominant choice for desktop connectivity to the network.

^ Allan, David; Bragg, Nigel (2012). 802.1aq Shortest Path Bridging Design and Evolution : The Architects' Perspective. New York: Wiley. ISBN 978-1-118-14866-2.

^ "HIGHLIGHTS – JUNE 2016". June 2016. Retrieved 2016-08-08. InfiniBand technology is now found on 205 systems, down from 235 systems, and is now the second most-used internal system interconnect technology. Gigabit Ethernet has risen to 218 systems up from 182 systems, in large part thanks to 176 systems now using 10G interfaces.

^ "Adopted Timeline" (PDF). IEEE 802.3bs Task Force. 2015-09-18. Retrieved 2017-01-08.

^ "1BASE5 Medium Specification (StarLAN)". cs.nthu.edu.tw. 1996-12-28. Retrieved 2014-11-11.

^ "802.3-2012 – IEEE Standard for Ethernet" (PDF). ieee.org. IEEE Standards Association. 2012-12-28. Retrieved 2014-02-08.

^ IEEE 802.3 8.2 MAU functional specifications

^ IEEE 802.3 8.2.1.5 Jabber function requirements

^ IEEE 802.3 12.4.3.2.3 Jabber function

^ IEEE 802.3 9.6.5 MAU Jabber Lockup Protection

^ IEEE 802.3 27.3.2.1.4 Timers

^ IEEE 802.3 41.2.2.1.4 Timers

^ IEEE 802.3 27.3.1.7 Receive jabber functional requirements

^ IEEE 802.1 Table C-1—Largest frame base values

Further reading

Digital Equipment Corporation; Intel Corporation; Xerox Corporation (September 1980). "The Ethernet: A Local Area Network". ACM SIGCOMM Computer Communication Review. 11 (3): 20. doi:10.1145/1015591.1015594. Version 1.0 of the DIX specification.

"Ethernet Technologies". Internetworking Technology Handbook. Cisco Systems. Retrieved April 11, 2011.

Charles E. Spurgeon (2000). Ethernet: The Definitive Guide. O'Reilly Media. ISBN 978-1-56592-660-8.

External links

Wikimedia Commons has media related to Ethernet.

- IEEE 802.3 Ethernet working group

- IEEE 802.3-2015 standard